HapTurk: Crowdsourcing Haptics

How do we crowdsource haptics? Platforms like Amazon's Mechanical Turk have enabled rapid, large-scale feedback for text, graphics, and sound. Designers can collect impressions in minutes; researchers run experiments within hours. But sending haptic devices out at such a scale is infeasible.

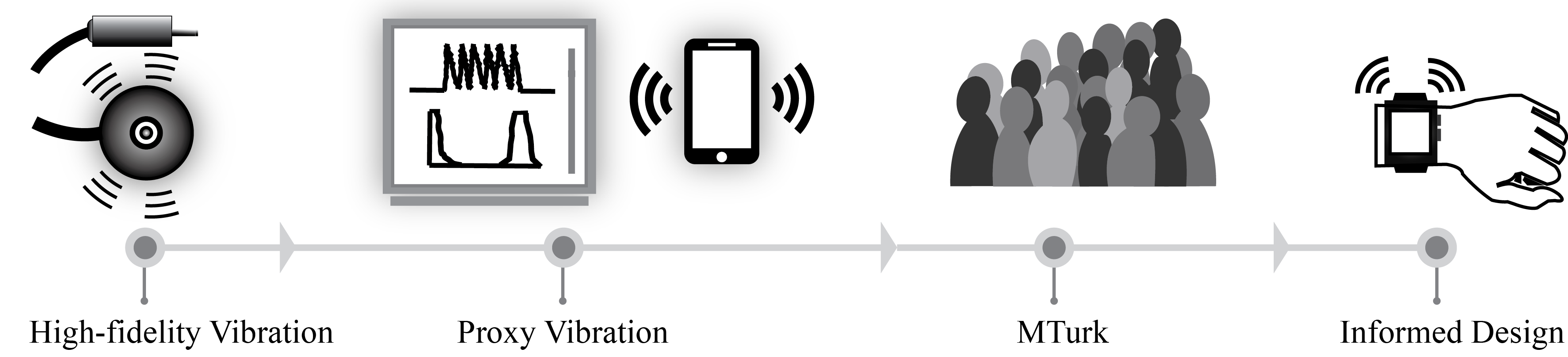

We use two proxy modalities to convey the affective qualities of vibrations. We designed a new visualization, developed a method for sending low-fidelity phone vibrations over MTurk, and analyzed how well both represent high-fidelity vibrations (like those used in wearable devices). We compared local and remote studies to identify which affective characteristics proxy modalities can convey. We found that our new visualization is more effective than typical waveforms, and that our proxies are complementary, each with advantages for different affective qualities. We also present guidelines for combining modalities more effectively in future crowdsourcing efforts.

- HapTurk: Crowdsourcing Affective Ratings for Vibrotactile Icons. 2016. Oliver Schneider, Hasti Seifi, Salma Kashani, Matthew Chun, Karon MacLean. CHI 2016. San Jose, CA, USA.

Paper: PDF ACM

Video: YouTube

Presentation: YouTube

Abstract

Vibrotactile (VT) display is becoming a standard component of informative user experience, where notifications and feedback must convey information eyes-free. However, effective design is hindered by incomplete understanding of relevant perceptual qualities. To access evaluation streamlining now common in visual design, we introduce proxy modalities as a way to crowdsource VT sensations by reliably communicating high-level features through a crowd-accessible channel. We investigate two proxy modalities to represent a high-fidelity tactor: a new VT visualization, and low-fidelity vibratory translations playable on commodity smartphones. We translated 10 high-fidelity vibrations into both modalities, and in two user studies found that both proxy modalities can communicate affective features, and are consistent when deployed remotely over Mechanical Turk. We analyze fit of features to modalities, and suggest future improvements.
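To make the idea of a low-fidelity vibratory translation concrete, here is a minimal sketch of one way a high-fidelity vibration could be reduced to something a commodity phone can play. It is an illustration under stated assumptions, not the translation method used in the paper. It assumes the source vibration is available as an amplitude envelope with values in 0..1, sampled at a fixed interval, and that the phone accepts a list of alternating vibrate and pause durations in milliseconds (the shape of pattern taken by the web Vibration API's `navigator.vibrate`). The function name `envelope_to_pattern` and the parameters `step_ms` and `threshold` are hypothetical.

```python
from typing import List


def envelope_to_pattern(
    envelope: List[float],
    step_ms: int = 20,       # assumed sampling interval of the envelope
    threshold: float = 0.5,  # assumed cutoff between "vibrating" and "silent"
) -> List[int]:
    """Reduce an amplitude envelope to alternating on/off durations in ms.

    The returned list starts with a vibrate duration, then a pause,
    then a vibrate duration, and so on. A leading 0 means the
    vibration starts with silence.
    """
    if not envelope:
        return []

    pattern: List[int] = []
    vibrating = True  # patterns always begin with a vibrate segment
    run_ms = 0        # length of the segment being accumulated

    for amplitude in envelope:
        is_on = amplitude >= threshold
        if is_on == vibrating:
            # Same state as the current segment: extend it.
            run_ms += step_ms
        else:
            # State changed: close the segment and start a new one.
            pattern.append(run_ms)
            vibrating = is_on
            run_ms = step_ms

    pattern.append(run_ms)

    # An even length means the pattern ends on a pause, which adds nothing.
    if len(pattern) % 2 == 0:
        pattern.pop()
    return pattern


if __name__ == "__main__":
    # Two strong samples, two weak samples, one strong sample.
    print(envelope_to_pattern([1.0, 0.9, 0.1, 0.0, 0.8]))
```

Thresholding discards amplitude detail entirely, which is exactly the kind of fidelity loss that makes it necessary to test whether a proxy still communicates the affective features of the original.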