Design Tools for Affective Robots

Collaborators: Paul Bucci, David Marino, Laura Cang, Karon MacLean

As robots enter our daily lives, they need to communicate believably: emotionally and on human terms. When physical agents gesture, touch, and breathe, they help humans and robots work together and even enable applications such as robot-assisted therapy. However, making a believable robot takes a great deal of time and expertise, because animators must avoid the uncanny valley.

We developed CuddleBits, a hardware design system for DIY robots. Designers and makers can create and remix CuddleBits rapidly, trying out new forms while experiencing their believability firsthand.

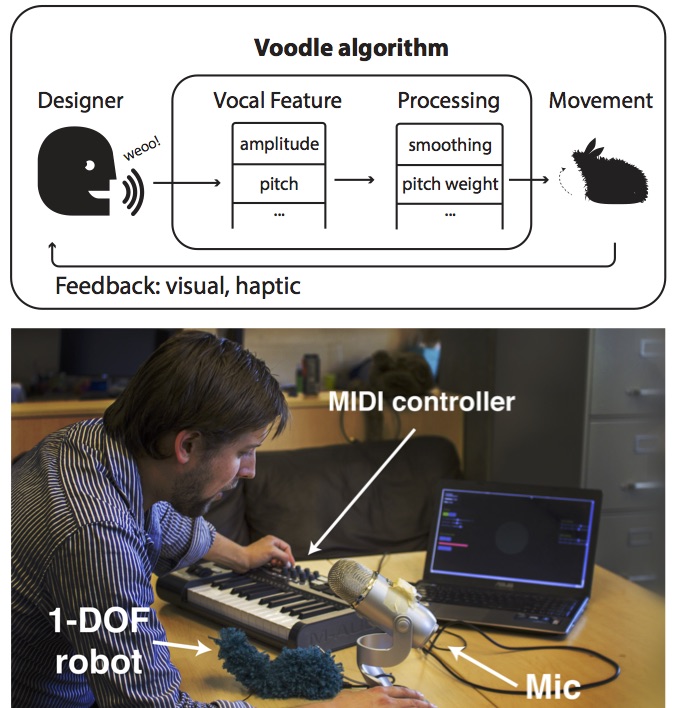

We also introduce Voodle (vocal doodling), a design tool that maps vocal features to robot motion. Voodle lets performers act out robot behaviours vocally, allowing them to imbue CuddleBits with a spark of life.
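To make the idea of mapping a vocal feature to motion concrete, here is a minimal, hypothetical sketch. It is not Voodle's implementation: it assumes a single feature (per-frame RMS loudness), a 1-DOF robot whose position is normalized to [0, 1], and invented names (`frame_rms`, `voice_to_motion`, `gain`, `smoothing`). Louder sound pushes the robot further, and an exponential moving average keeps the motion from jittering.

```python
import math
from typing import Iterable, List


def frame_rms(frame: List[float]) -> float:
    """Root-mean-square loudness of one audio frame (samples in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def voice_to_motion(frames: Iterable[List[float]],
                    gain: float = 4.0,
                    smoothing: float = 0.3) -> List[float]:
    """Hypothetical mapping from per-frame loudness to a 1-DOF position in [0, 1].

    gain scales loudness into position; smoothing in (0, 1] is the weight of
    the newest frame in an exponential moving average (1.0 = no smoothing).
    """
    position = 0.0
    trajectory = []
    for frame in frames:
        # Clamp the target to the actuator's normalized range.
        target = min(1.0, frame_rms(frame) * gain)
        # Low-pass filter: move a fraction of the way toward the target.
        position += smoothing * (target - position)
        trajectory.append(position)
    return trajectory


silence = [0.0] * 4
loud = [0.5, -0.5, 0.5, -0.5]
print(voice_to_motion([silence, loud, loud]))  # robot rises gradually as the voice gets loud
```

A real system would extract richer features (pitch, rhythm) and could map them to motion in many other ways; the smoothing step is one simple choice for turning a noisy vocal signal into motion that reads as deliberate.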

Sketching CuddleBits: Coupled Prototyping of Body and Behaviour for an Affective Robot Pet. Paul Bucci, Xi Laura Cang, Anasazi Valair, David Marino, Lucia Tseng, Merel Jung, Jussi Rantala, Oliver Schneider, Karon MacLean. 2017. CHI '17, Denver, CO, USA.

Voodle: Vocal Doodling to Sketch Affective Robot Motion. David Marino, Paul Bucci, Oliver Schneider, Karon MacLean. 2017. DIS '17, Edinburgh, UK.

CuddleBit Abstract

Social robots that physically display emotion invite natural communication with their human interlocutors, enabling applications like robot-assisted therapy where a complex robot's breathing influences human emotional and physiological state. Using DIY fabrication and assembly, we explore how simple 1-DOF robots can express affect with economy and user customizability, leveraging open-source designs.

We developed low-cost techniques for coupled iteration of a simple robot's body and behaviour, and evaluated its potential to display emotion. Through two user studies, we (1) validated these CuddleBits' ability to express emotions (N=20); (2) sourced a corpus of 72 robot emotion behaviours from participants (N=10); and (3) analyzed it to link underlying parameters to emotional perception (N=14).

We found that CuddleBits can express arousal (activation) and, to a lesser degree, valence (pleasantness). We also show how a sketch-refine paradigm, combined with DIY fabrication and novel input methods, enables parametric design of physical emotion display, and discuss how mastering this parsimonious case can give insight into layering simple behaviours in more complex robots.

Voodle Abstract

Social robots must be believable to be effective, but creating believable, affectively expressive robot behaviours requires time and skill. Inspired by the directness with which performers use their voices to craft characters, we introduce Voodle (vocal doodling), which uses the form of utterances (e.g., tone and rhythm) to puppet and eventually control robot motion. Voodle offers an improvisational platform capable of conveying hard-to-express ideas like emotion. We created a working Voodle system by collecting a set of vocal features and associated robot motions, then incorporating them into a prototype for sketching robot behaviour. We explored and refined Voodle's expressive capacity by engaging expert performers in an iterative design process. We found that users develop a personal language with Voodle; that a vocalization's meaning changes with narrative context; and that voodling imparts a sense of life to the robot, inviting designers to suspend disbelief and engage in a playful, conversational style of design.